Core concepts

Point in time

When creating datasets will tabluar data suffer from an unique problem. Here may we need to combine multiple sources into one flat table, and use this as input. But this creates a problem.

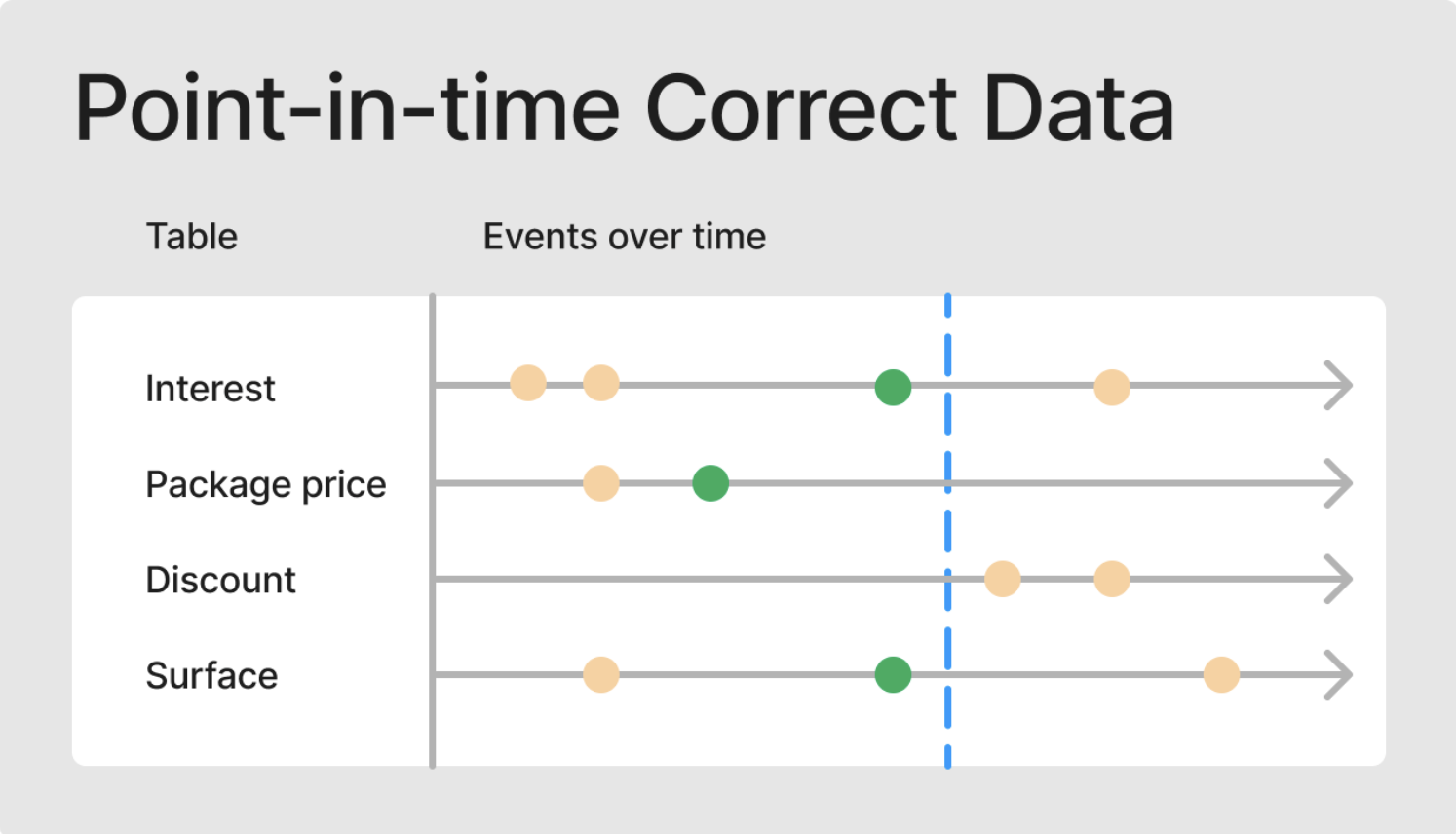

Because it can be hard to know which data is valid at different points in time. One such example could be to know what the average viewing time in the past week have been. However, if we compute the feature from the incorrect point in time, will this effect the model performance. This is why we need to make sure we have point in time valid data, shown in the image bellow.

Showing how data updates over time, and point in time data needs to select data based on an event timestamp (shown as the blue line). Therefore, we can not use the data after the event timestamp in our model.

This can be an easy mistake to make, and it can be extremely hard to notice, as it can be a silent bug.

Event Timestamp

Features are often subject to updates over time. To facilitate retrospective analysis and select features available at the intended prediction time, it is crucial to identify the timestamp to filter on. This is known as the event timestamp.

Therefore, making it possible to filter out features that was non-existing at inference time, or compute aggregations in a time window.

Setting an event timestamp is easy.

from aligned import EventTimestamp, Int32, FeatureView

@feature_view(...)

class Loan:

loan_id = String().as_entity()

created_at = EventTimestamp()

You can now add an event timestamp in when asking for features to filter out data that would not be availible at the time our prediction would happen. Therefore, mitigating the posibility of data leakage.

entities = {

"loan_id": [1, 2, ...],

"event_timestamp": [

datetime(year=2022, month=6, day=1),

datetime(year=2022, month=6, day=2),

...

]

}

features = await store.feature_view("loan")\

.features_for(

entities,

event_timestamp_column="event_timestamp"

).to_polars()